The issue

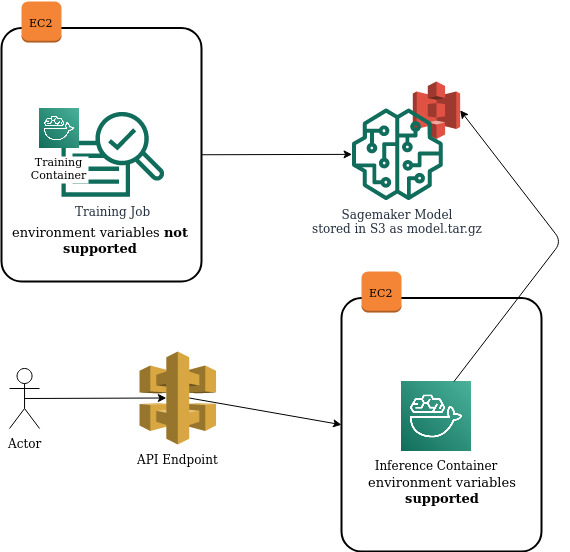

Recently I wanted to use environment variables in a Docker container running a training job on AWS SageMaker. Unfortunately, passing environment variables when starting the container is only possible for containers used for inference (that is, once the model has already been created). The diagram shows the main components of AWS SageMaker and the steps at which environment variables are supported.

In this article I will describe how to use environment variables in a training job on AWS SageMaker. As it’s not supported by AWS, the only option I found was a workaround on Stack Overflow (https://stackoverflow.com/questions/51215092/setup-env-variable-in-aws-sagemaker-container-bring-your-own-container). Because the answer there is quite brief, I wrote this article to add some commentary on the solution. If you know a better way, feel free to leave a comment 🙂

The solution

When you create a training job you can define hyperparameters for tuning the training. Inside the Docker container, SageMaker makes them available in the file /opt/ml/input/config/hyperparameters.json

The idea of the workaround is to pass the values you would otherwise set as environment variables as additional hyperparameters, so that they end up in this file. An example is shown below:

{
  "batch_size": 100,
  "epochs": 10,
  "learning_rate": 0.1,
  "momentum": 0.9,
  "log_interval": 100,
  "aws_access_key_id": "ABCDEDF",
  "aws_secret_access_key": "123456"
}

In this example I added two extra parameters, aws_access_key_id and aws_secret_access_key, to pass IAM credentials to the container, where they can later be used by, for example, the AWS CLI or a Python script.

Now you can access the values using the tool jq:

jq -r ".aws_access_key_id" /opt/ml/input/config/hyperparameters.json

jq -r ".aws_secret_access_key" /opt/ml/input/config/hyperparameters.json

A complete example using this approach consists of these steps:

Create a bash script saved as exporting_vars.sh which exports the variables:

#!/bin/bash
export AWS_ACCESS_KEY_ID=$(jq -r ".aws_access_key_id" /opt/ml/input/config/hyperparameters.json)
export AWS_SECRET_ACCESS_KEY=$(jq -r ".aws_secret_access_key" /opt/ml/input/config/hyperparameters.json)
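One caveat with the plain jq -r calls: when a key is missing, jq prints the literal string null, so the variable would silently be set to "null". The following is a minimal sketch of a more defensive variant, not part of the original Stack Overflow answer. The function name export_from_hyperparameter, the HYPERPARAMETERS_FILE override and the DEMO_ variable names are my own assumptions for illustration, and the demo runs against a throwaway file with made-up values so it can be tried outside SageMaker.

```shell
#!/bin/bash
# Hypothetical helper, sketched for illustration only.
# HYPERPARAMETERS_FILE is an assumed override for trying this outside
# SageMaker; inside a training container the default path applies.
HYPERPARAMETERS_FILE="${HYPERPARAMETERS_FILE:-/opt/ml/input/config/hyperparameters.json}"

# export_from_hyperparameter VAR KEY
# Exports VAR with the value stored under KEY. Returns non-zero and leaves
# VAR untouched if the file is unreadable or the key is missing or null.
export_from_hyperparameter() {
    local var_name="$1" key="$2" value
    # "// empty" suppresses the literal "null" for a missing key; with -e,
    # jq then exits non-zero because no result was produced.
    if ! value=$(jq -e -r --arg key "$key" '.[$key] // empty' "$HYPERPARAMETERS_FILE" 2>/dev/null); then
        echo "hyperparameter '$key' not found in $HYPERPARAMETERS_FILE" >&2
        return 1
    fi
    export "$var_name=$value"
}

# Demo against a temporary file with made-up values.
HYPERPARAMETERS_FILE="$(mktemp)"
printf '{"epochs": 10, "aws_access_key_id": "ABCDEDF"}\n' > "$HYPERPARAMETERS_FILE"
unset DEMO_KEY_ID DEMO_SECRET

export_from_hyperparameter DEMO_KEY_ID aws_access_key_id
if ! export_from_hyperparameter DEMO_SECRET aws_secret_access_key; then
    echo "secret key was not provided"
fi

rm -f "$HYPERPARAMETERS_FILE"
```

In a real container you would drop the demo part and call the function with AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY as the variable names. Like exporting_vars.sh, such a script has to be sourced for the exports to reach the calling shell.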

If the variables should be available not only inside exporting_vars.sh but also in the shell that calls it, you have to source the script instead of executing it:

source exporting_vars.sh

You can source it, for example, in your main entrypoint script.
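To make the difference between executing and sourcing concrete, here is a small self-contained sketch. The variable DEMO_VAR and the temporary script are made up for the demonstration and stand in for exporting_vars.sh.

```shell
#!/bin/bash
# Demo: a variable exported by a script only reaches the caller when the
# script is sourced. DEMO_VAR and the temporary script are made up.
demo_script="$(mktemp)"
echo 'export DEMO_VAR="hello"' > "$demo_script"

unset DEMO_VAR

# Executing runs the script in a child process; its exports are lost
# when that process ends.
bash "$demo_script"
after_execute="${DEMO_VAR:-<unset>}"

# Sourcing runs the script in the current shell, so the export persists.
source "$demo_script"
after_source="${DEMO_VAR:-<unset>}"

echo "after executing: $after_execute"
echo "after sourcing:  $after_source"

rm -f "$demo_script"
```

The same applies to an entrypoint script: the training program only sees the variables if exporting_vars.sh was sourced in the shell that starts it.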

Now the variables are available just as if they had been passed directly as Docker environment variables. Log in to your container and try:

echo $AWS_SECRET_ACCESS_KEY
echo $AWS_ACCESS_KEY_ID
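If you would rather not print the secret itself (container output may end up in logs), a check that only reports whether each variable is set can be enough. This is a minimal sketch; the function name require_vars and the DEMO_ variables are my own invention for illustration.

```shell
#!/bin/bash
# require_vars NAME...
# Reports which of the named variables are set and non-empty, without
# printing their values. Returns 1 if any of them is missing.
require_vars() {
    local name missing=0
    for name in "$@"; do
        # ${!name} is bash indirect expansion: the value of the variable
        # whose name is stored in $name.
        if [ -n "${!name:-}" ]; then
            echo "$name is set"
        else
            echo "$name is MISSING"
            missing=1
        fi
    done
    return "$missing"
}

# Demo with made-up variables: one set, one unset.
export DEMO_KEY_ID="ABCDEDF"
unset DEMO_SECRET
report="$(require_vars DEMO_KEY_ID DEMO_SECRET)" || echo "some variables are missing"
echo "$report"
```

In the container you would call it as require_vars AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY after sourcing exporting_vars.sh.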

I would really appreciate it if AWS supported environment variables for training jobs out of the box. Let’s see when this will happen.