The issue

I needed a job in a GitLab CI/CD pipeline that calculates Google’s Lighthouse score (https://developers.google.com/web/tools/lighthouse) for a specific website URL serving the new code from that pipeline run. The Lighthouse results should be stored in AWS S3 and made available through a presigned URL with a limited time to live (TTL). The pipeline should deploy the new code only if the score is high enough. With this additional step I wanted to prevent a new deployment or release from lowering the Lighthouse score of my website.

The solution

I’m running Lighthouse within a Docker container, so it’s easy to reuse it for other projects. As a starting point I needed Chrome running in headless mode. On Docker Hub I found this nice container: https://hub.docker.com/r/justinribeiro/chrome-headless/

Based on this container I added some environment variables so that the Lighthouse and S3 configuration can be adjusted at runtime. This is my Dockerfile:

FROM justinribeiro/chrome-headless

# default values for environment variables
ENV URL=https://www.allaboutaws.com
ENV AWS_ACCESS_KEY_ID=EMPTY
ENV AWS_SECRET_ACCESS_KEY=EMPTY
ENV AWS_DEFAULT_REGION=EMPTY
ENV AWS_S3_LINK_TTL=EMPTY
ENV AWS_S3_BUCKET=EMPTY
ENV LIGHTHOUSE_SCORE_THRESHOLD=0.80

USER root

RUN apt-get update && \
    apt-get install -y bc curl gnupg2 sudo && \
    curl -sL https://deb.nodesource.com/setup_10.x | bash - && \
    apt-get install -y nodejs && \
    npm install -g lighthouse && \
    curl -O https://bootstrap.pypa.io/get-pip.py && \
    python get-pip.py && \
    pip install awscli && \
    apt-get purge --auto-remove -y python gnupg2 curl && \
    rm -rf /var/lib/apt/lists/*

RUN mkdir /tmp/lighthouse_score && chown chrome:chrome /tmp/lighthouse_score

ADD ./run.sh /tmp/run.sh

ENTRYPOINT /bin/bash /tmp/run.sh

The environment variables in the Dockerfile are:

- ENV URL: the URL to run Lighthouse against

- ENV AWS_ACCESS_KEY_ID: AWS Access Key for storing results in S3

- ENV AWS_SECRET_ACCESS_KEY: AWS Secret Key for storing results in S3

- ENV AWS_DEFAULT_REGION: AWS Region for S3

- ENV AWS_S3_LINK_TTL: Time to live of the presigned S3 URL, in seconds

- ENV AWS_S3_BUCKET: Name of S3 bucket containing Lighthouse result

- ENV LIGHTHOUSE_SCORE_THRESHOLD: the Lighthouse performance score (between 0 and 1) that a run must exceed for the GitLab CI/CD pipeline to continue; otherwise the pipeline is aborted
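
The EMPTY default acts as a placeholder meaning "not configured". As a minimal sketch of how such a placeholder can be resolved for AWS_S3_LINK_TTL, the helper below falls back to 24 hours (the fallback run.sh uses) and rejects values that are not a positive number of seconds. The function name resolve_ttl is hypothetical and not part of the original files.

```shell
# Hypothetical helper: turn AWS_S3_LINK_TTL into a number of seconds.
# "EMPTY" (the Dockerfile default) or an unset value falls back to 24 hours.
resolve_ttl() {
  local ttl="${1:-EMPTY}"
  local default_ttl=86400   # 24 hours in seconds

  case "$ttl" in
    EMPTY)
      echo "$default_ttl"
      ;;
    0|*[!0-9]*)
      # zero or anything containing a non-digit is not a usable TTL
      echo "invalid TTL: $ttl" >&2
      return 1
      ;;
    *)
      echo "$ttl"
      ;;
  esac
}
```

It could be used as `aws s3 presign "$S3_PATH" --expires-in "$(resolve_ttl "$AWS_S3_LINK_TTL")"`, which would replace the two separate presign branches with a single call.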

Run Lighthouse and save the results at a presigned S3 URL

All necessary packages are installed when the image is built. When the container starts, the entrypoint Bash script run.sh is executed. The file is shown below:

#!/bin/bash

FILEPATH=/tmp/lighthouse_score
FILENAME=$(date "+%Y-%m-%d-%H-%M-%S").html
S3_PATH="s3://$AWS_S3_BUCKET/$FILENAME"

echo "running lighthouse score against: $URL"

# run Lighthouse as the unprivileged chrome user and write an HTML report
sudo -u chrome lighthouse --chrome-flags="--headless --disable-gpu --no-sandbox" --no-enable-error-reporting --output html --output-path "$FILEPATH/$FILENAME" "$URL"

# skip the upload if any AWS setting is unset or still has its EMPTY default
if [ -z "$AWS_ACCESS_KEY_ID" ] || [ "$AWS_ACCESS_KEY_ID" == "EMPTY" ] ||
   [ -z "$AWS_SECRET_ACCESS_KEY" ] || [ "$AWS_SECRET_ACCESS_KEY" == "EMPTY" ] ||
   [ -z "$AWS_DEFAULT_REGION" ] || [ "$AWS_DEFAULT_REGION" == "EMPTY" ] ||
   [ -z "$AWS_S3_BUCKET" ] || [ "$AWS_S3_BUCKET" == "EMPTY" ]
then
    printf "\nYou can find the lighthouse score result html file on your host machine in the mapped volume directory.\n"
else
    echo "uploading lighthouse score result html file to S3 Bucket: $S3_PATH ..."
    aws s3 cp "$FILEPATH/$FILENAME" "$S3_PATH"

    if [ -z "$AWS_S3_LINK_TTL" ] || [ "$AWS_S3_LINK_TTL" == "EMPTY" ]
    then
        printf "\nSee the results of this run at (link valid for 24 hours, the default):\n\n"
        aws s3 presign "$S3_PATH" --expires-in 86400
        printf "\n"
    else
        printf "\nSee the results of this run at (link valid for %s seconds):\n\n" "$AWS_S3_LINK_TTL"
        aws s3 presign "$S3_PATH" --expires-in "$AWS_S3_LINK_TTL"
        printf "\n"
    fi
fi

# extract the performance score from the JSON embedded in the HTML report
PERFORMANCE_SCORE=$(grep -Po '"id":"performance","score":(.*?)}' "$FILEPATH/$FILENAME" | sed 's/.*:\(.*\)}.*/\1/g')

# bc prints 1 if the comparison is true; an empty score falls through to the else branch
if [ "$(echo "$PERFORMANCE_SCORE > $LIGHTHOUSE_SCORE_THRESHOLD" | bc)" == "1" ]
then
    echo "The Lighthouse Score is $PERFORMANCE_SCORE which is greater than $LIGHTHOUSE_SCORE_THRESHOLD, proceed with the CI/CD Pipeline..."
    exit 0
else
    echo "The Lighthouse Score is $PERFORMANCE_SCORE which is not greater than $LIGHTHOUSE_SCORE_THRESHOLD, DON'T proceed with the CI/CD Pipeline. Exiting now."
    exit 1
fi

This script does the following:

- runs Lighthouse against the URL using Chrome in headless mode

- saves the results as an HTML file in the container at /tmp/lighthouse_score, using a file name that contains the current date and time

- if the AWS environment variables are set, uploads the HTML file to the specified S3 bucket and creates a presigned URL for it with the CLI command aws s3 presign

- extracts the performance score from the HTML file using grep and sed

- prints a message saying whether the GitLab pipeline should proceed or stop, depending on whether $PERFORMANCE_SCORE > $LIGHTHOUSE_SCORE_THRESHOLD, and exits with code 0 (proceed with the GitLab pipeline) or 1 (don’t proceed with the GitLab pipeline)
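
The last two steps can be tried in isolation, without running Lighthouse. The following is only a sketch under a few assumptions: the function names extract_performance_score and score_passes are hypothetical, the extraction uses a simplified grep -o pattern instead of the grep -Po call in run.sh, and the comparison uses awk instead of bc so that it also works where bc is not installed.

```shell
# Hypothetical helper: print the performance score found in a report file.
# Assumes the report embeds JSON containing "id":"performance","score":<number>.
extract_performance_score() {
  local report_file="$1"
  grep -o '"id":"performance","score":[0-9.]*' "$report_file" \
    | head -n 1 \
    | sed 's/.*"score"://'
}

# Hypothetical helper: succeed (exit status 0) only if score > threshold.
# An empty score counts as a failure, so a broken report stops the pipeline.
score_passes() {
  local score="$1"
  local threshold="$2"
  [ -n "$score" ] || return 1
  awk -v s="$score" -v t="$threshold" 'BEGIN { exit !(s + 0 > t + 0) }'
}
```

A gate built from these helpers could look like `score_passes "$(extract_performance_score report.html)" 0.80 || exit 1`, where report.html stands for the generated report. A non-zero exit status is what makes GitLab mark the job as failed.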

Integrate it into a GitLab CI/CD pipeline

The GitLab CI/CD job in .gitlab-ci.yml could look like the following YAML snippet:

calculate_lighthouse_score:
  stage: testing
  image: docker:latest
  only:
    - dev
  variables:
    URL: https://allaboutaws.com
    S3_REGION: us-east-1
    S3_LINK_TTL: 86400
    S3_BUCKET: MY-S3-BUCKET/lighthouse
    LIGHTHOUSE_SCORE_THRESHOLD: "0.50"
  script:
    - docker pull sh39sxn/lighthouse-signed-s3
    # AWS_ACCESS_KEY and AWS_SECRET_KEY are not defined in this file; they are
    # presumably set as CI/CD variables in the GitLab project settings
    - |
      docker run -e URL=$URL \
        -e AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY \
        -e AWS_SECRET_ACCESS_KEY=$AWS_SECRET_KEY \
        -e AWS_DEFAULT_REGION=$S3_REGION \
        -e AWS_S3_LINK_TTL=$S3_LINK_TTL \
        -e AWS_S3_BUCKET=$S3_BUCKET \
        -e LIGHTHOUSE_SCORE_THRESHOLD=$LIGHTHOUSE_SCORE_THRESHOLD \
        sh39sxn/lighthouse-signed-s3:latest

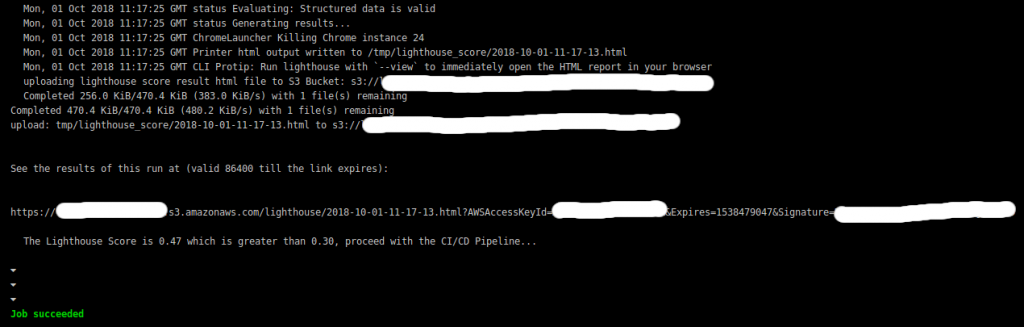

In GitLab logs you will see an output like:

Save Lighthouse results on your machine

You can also run the Docker container on your own machine and collect the results, which are stored at /tmp/lighthouse_score inside the container. To do so, mount a directory from your host machine into the container as a Docker volume. The run command would be:

docker run -it -v /tmp:/tmp/lighthouse_score -e URL=https://allaboutaws.com sh39sxn/lighthouse-signed-s3:latest

You will find the Lighthouse result on your host machine at /tmp.
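
After several local runs the mounted directory contains one report per run. Because run.sh names each report after its timestamp (YYYY-MM-DD-HH-MM-SS.html), sorting the names alphabetically also sorts them chronologically. Here is a small sketch for picking the newest one; the function name latest_report is hypothetical and not part of the original files.

```shell
# Hypothetical helper: print the newest Lighthouse report in a directory.
# Relies on the timestamp file names produced by run.sh.
latest_report() {
  local dir="${1:-/tmp}"
  local newest=""
  local f
  for f in "$dir"/[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]-*.html; do
    [ -e "$f" ] || continue   # the pattern matched nothing
    newest="$f"               # glob results are sorted, so the last one is the newest
  done
  [ -n "$newest" ] && echo "$newest"
}
```

Calling `latest_report /tmp` prints the path of the most recent report, or returns a non-zero status if the directory contains none.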

External Links

I uploaded the files to my GitHub repo at https://github.com/sh39sxn/lighthouse-signed-s3, and the prebuilt container is available in my Docker Hub repo at https://hub.docker.com/r/sh39sxn/lighthouse-signed-s3.